SLAM lets a robot or vehicle estimate its own position while building a map of its surroundings at the same time. The map can then be used for path planning and obstacle avoidance in automated vehicles.

Learn about the SLAM-related terms

SLAM = Simultaneous localization and mapping

LiDAR = Light detection and ranging

AUV = Autonomous underwater vehicle

IMU = Inertial Measurement Unit

vSLAM = Visual SLAM

ToF = Time of flight. A ToF camera is a range-imaging camera that measures distance from the time emitted light takes to return.

Point cloud data = The output of a LiDAR sensor. Each point can be 2D (x, y) or 3D (x, y, z).

ICP = Iterative closest point

NDT = Normal distributions transform. An algorithm used for matching (registering) point clouds

PnP = Perspective-n-point

TAM = Tracking and mapping

PTAM = Parallel tracking and mapping

EKF = Extended Kalman filter. A nonlinear extension of the Kalman filter used for state estimation

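DoF = Degrees of freedom. In EKF-based SLAM it describes the estimated pose; a 6-DoF pose has three translational and three rotational components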

BA = Bundle adjustment

Rolling shutter = An image-capture method in which a still picture (in a still camera) or each frame of a video (in a video camera) is not taken as a snapshot of the entire scene at a single instant, but is captured by scanning rapidly across the scene, vertically, horizontally or rotationally

ORB-SLAM = SLAM based on ORB (Oriented FAST and Rotated BRIEF) features

LSD-SLAM = Large-scale direct monocular SLAM

DSO-SLAM = Direct sparse odometry SLAM

BoW = Bag of words

SIFT = Scale-invariant feature transform

SURF = Speeded-up robust features

LSTM = Long short-term memory
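To make the ICP entry above concrete, here is a minimal 2D point-to-point ICP sketch in plain Python. It assumes brute-force nearest-neighbour matching, no outlier rejection and a fixed number of iterations, so it is an illustration of the idea rather than the implementation used by any particular SLAM system; the function names are hypothetical.

```python
import math

def best_rigid_transform(src, dst):
    """Closed-form 2D rotation + translation that best maps src onto dst (least squares)."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy      # centred source point
        bx, by = qx - dx, qy - dy      # centred target point
        num += ax * by - ay * bx       # cross-product term
        den += ax * bx + ay * by       # dot-product term
    theta = math.atan2(num, den)       # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def apply(points, theta, tx, ty):
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp(source, target, iterations=20):
    """Iteratively align `source` to `target`; returns the moved source points."""
    current = list(source)
    for _ in range(iterations):
        # 1. Data association: pair every point with its closest target point.
        matched = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        # 2. Solve for the rigid transform that best aligns these pairs.
        theta, tx, ty = best_rigid_transform(current, matched)
        # 3. Apply it and repeat with the new correspondences.
        current = apply(current, theta, tx, ty)
    return current
```

Real scans need a k-d tree for the nearest-neighbour step and some way to reject bad pairs; NDT avoids explicit point pairs by matching against local normal distributions instead.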

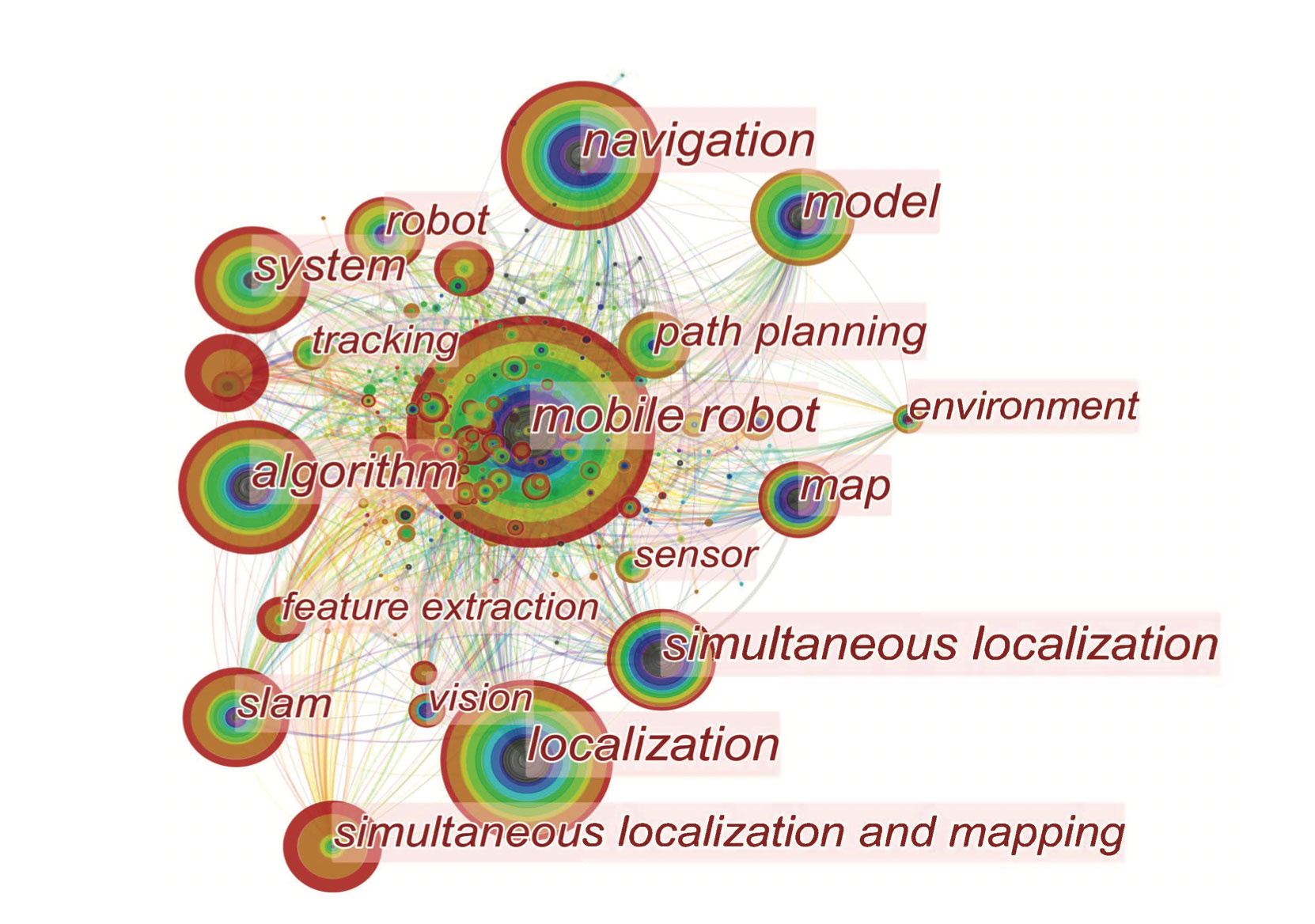

How many parts can SLAM be divided into?

SLAM can be divided into five parts.

How many parts is V-SLAM divided into?

V-SLAM is divided into two parts: the frontend and the backend.

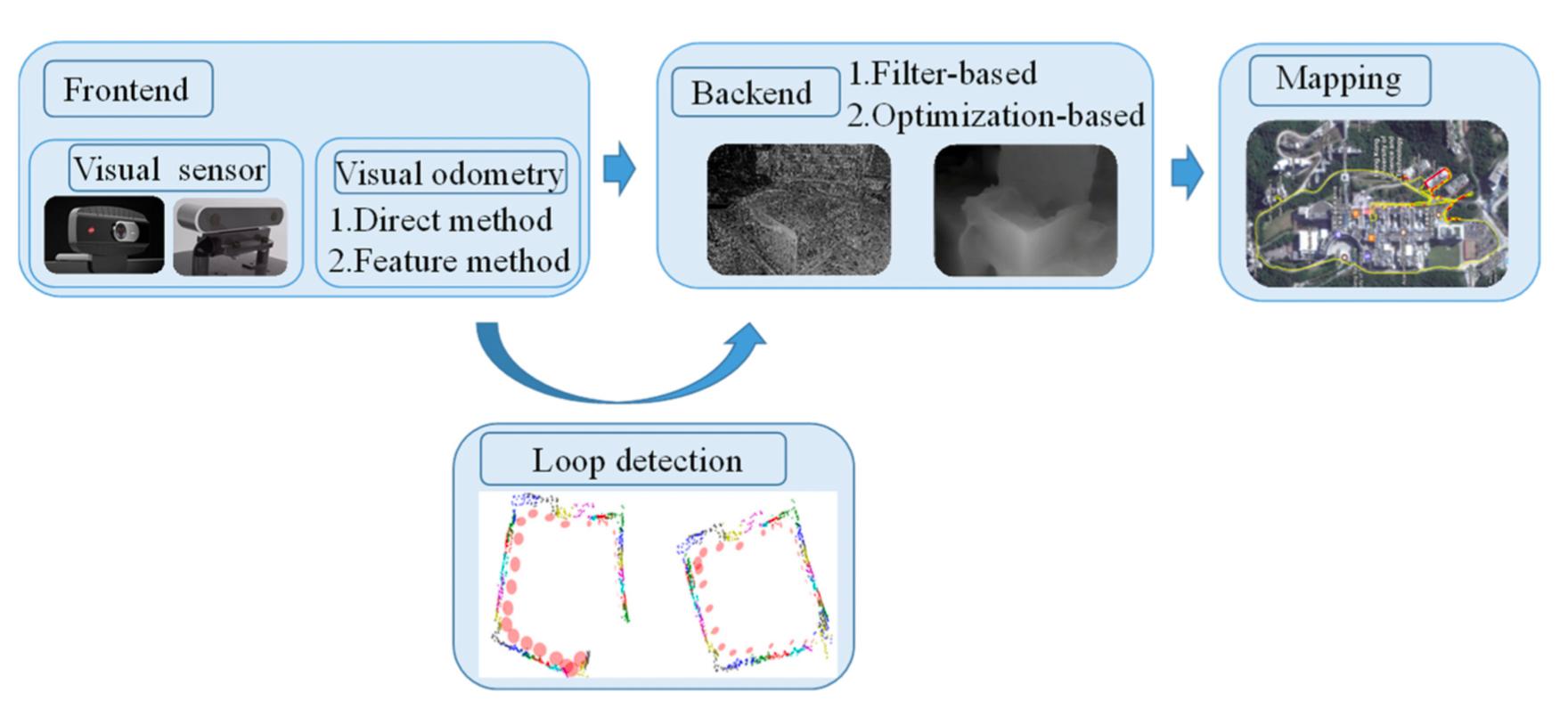

The frontend collects image data from the visual sensors and passes it to the visual odometry (VO) module, which estimates the camera motion between adjacent images and builds a local map.

The backend optimizes the poses and map estimated by the frontend and produces a complete, globally consistent map.

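What's the purpose of loop detection?

Loop detection checks whether the robot has returned to a place it has already visited. Recognizing such a revisit adds a constraint between the current pose and the earlier one, which lets the backend correct the drift that has accumulated in between.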

What's the problem with monocular cameras?

A single camera provides no depth information, so the absolute scale of the map and of the trajectory cannot be recovered from the images alone (scale ambiguity).
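The scale ambiguity can be checked with a simple pinhole model: if the scene and the camera translation are both multiplied by the same factor, every pixel coordinate stays the same, so the images cannot tell the two worlds apart. This sketch assumes an ideal pinhole camera with no rotation and made-up intrinsics (`f`, `cx`, `cy`); the point and camera positions are arbitrary.

```python
def project(point, cam_pos, f=500.0, cx=320.0, cy=240.0):
    """Ideal pinhole camera at cam_pos looking along +Z with no rotation (assumption)."""
    x, y, z = (p - c for p, c in zip(point, cam_pos))
    return (f * x / z + cx, f * y / z + cy)

def views(scale):
    """Project one 3D point into two camera positions after scaling the whole world by `scale`."""
    point = tuple(scale * c for c in (0.4, -0.2, 3.0))
    cam_a = (0.0, 0.0, 0.0)
    cam_b = tuple(scale * c for c in (0.5, 0.0, 0.0))   # the baseline is scaled too
    return project(point, cam_a), project(point, cam_b)

for s in (1.0, 2.0, 10.0):
    (ua, va), (ub, vb) = views(s)
    print(f"scale={s:>4}: view A=({ua:.2f}, {va:.2f}), view B=({ub:.2f}, {vb:.2f})")
```

All three lines print the same pixel coordinates, which is why monocular SLAM needs extra information (a known object size, an IMU, or a depth sensor) to fix the metric scale.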

What about stereo cameras?

Stereo cameras can obtain depth information both indoors and outdoors in four steps: calibration, rectification, matching, and depth calculation. However, this requires a lot of computation, especially for the matching step.
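The last two steps can be sketched for a rectified pair: matching searches along the same image row for the best-fitting window, and the resulting disparity `d` gives depth through `Z = f * B / d` (focal length `f` in pixels, baseline `B` in metres). The SAD block-matching search and all numbers below are illustrative assumptions, not a specific library's algorithm; running this search for every pixel is where the heavy computation comes from.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth of a point in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero disparity means a point at infinity")
    return focal_px * baseline_m / disparity_px

def match_disparity(left_row, right_row, x, half_window=2, max_disp=16):
    """Matching step: find the disparity at column x of the left row by
    minimizing the sum of absolute differences (SAD) over a small window."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - half_window < 0:
            break  # the window would fall off the left edge of the right image
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-half_window, half_window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

# Example with made-up calibration: f = 700 px, baseline = 0.12 m, disparity = 42 px.
print(stereo_depth(42, 700.0, 0.12))  # about 2.0 m
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters much more for distant points than for nearby ones.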

What about RGB-D cameras?

They provide both color and depth information for each pixel. RGB-D cameras measure depth using structured light or time-of-flight (ToF) technology.
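The ToF principle reduces to simple arithmetic: light travels to the object and back, so the distance is half the round-trip path. Many ToF cameras measure the phase shift of modulated light instead of timing a pulse; both variants are sketched below, with the example timing and modulation frequency chosen arbitrarily.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def tof_distance_from_time(round_trip_s):
    """Pulsed ToF: halve the round-trip path of the light."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def tof_distance_from_phase(phase_rad, modulation_hz):
    """Continuous-wave ToF: distance from the phase shift of the modulated signal.
    Only unambiguous up to c / (2 * modulation_hz)."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * modulation_hz)

print(tof_distance_from_time(20e-9))           # about 3.0 m for a 20 ns round trip
print(tof_distance_from_phase(math.pi, 20e6))  # about 3.75 m at 20 MHz modulation
```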

How does visual odometry collect information?

Visual odometry uses either a feature-point method or a direct method to estimate the motion between frames and build a local map. The feature-point method works with extracted features, while the direct method works directly on pixel intensities.

How does the feature-point method work?

The feature-point method extracts sparse features, matches them between frames using their descriptors, and calculates the camera pose from the geometric constraints among the matched points.
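The matching step can be sketched as brute-force nearest-neighbour search between the descriptors of two frames. The ratio test used here (keep a match only if it is clearly better than the second-best candidate) is one common filtering choice, assumed for illustration; descriptors are plain float tuples, and the pose calculation that follows (for example PnP) is not shown.

```python
import math

def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Brute-force matching with a ratio test.
    Returns (i, j) pairs meaning desc_a[i] matches desc_b[j]."""
    matches = []
    for i, da in enumerate(desc_a):
        # Distances from this descriptor to every descriptor in the other frame.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue
        (best, j), (second, _) = dists[0], dists[1]
        # Ambiguous matches (best barely better than the runner-up) are dropped.
        if best < ratio * second:
            matches.append((i, j))
    return matches

frame_1 = [(0.0, 0.0), (10.0, 0.0), (5.0, 5.0)]
frame_2 = [(10.1, 0.0), (0.1, 0.1), (20.0, 20.0)]
print(match_descriptors(frame_1, frame_2))
```

The third descriptor in `frame_1` is roughly equidistant from two candidates, so it is rejected instead of producing a possibly wrong match.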

What does ORB-SLAM use?

It uses the feature-point method. Feature points are relatively stable, so this method is widely used.

What does the backend do in SLAM?

Backend optimization is essentially a state estimation problem. It is solved either with filter-based methods, such as the Kalman filter (KF), or with nonlinear optimization methods.
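To show what "filter-based" means, here is a scalar Kalman filter for a robot moving along a line: each step predicts the new position from the commanded motion, then corrects it with a noisy position measurement. It is a 1D linear-Gaussian toy, not a SLAM backend; the noise values `q` and `r` and the measurements are assumed.

```python
def kf_predict(x, p, u, q):
    """Predict: move the estimate by control u; uncertainty grows by process noise q."""
    return x + u, p + q

def kf_update(x, p, z, r):
    """Update: blend the prediction with measurement z (noise r) using the Kalman gain."""
    k = p / (p + r)                     # gain: how much to trust the measurement
    return x + k * (z - x), (1.0 - k) * p

x, p = 0.0, 1.0                          # initial estimate and its variance
for u, z in [(1.0, 1.2), (1.0, 1.9), (1.0, 3.1)]:
    x, p = kf_predict(x, p, u, q=0.5)
    x, p = kf_update(x, p, z, r=0.5)
    print(f"estimate={x:.3f}, variance={p:.3f}")
```

The filter only keeps the latest state and its variance; older states are marginalized away, which is one reason graph-based methods that keep the whole trajectory have become popular.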

What is the problem with KF?

Keeping it real-time is difficult, and its estimates can contain large errors.

What's the problem with EKF?

EKF linearizes the system around the current estimate, so its accuracy degrades in strongly nonlinear and non-Gaussian systems.
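The linearization problem shows up even in one dimension. The EKF pushes a Gaussian through a nonlinear function using only the first derivative, while sampling gives the true result. With the toy function h(x) = x² (an assumption chosen for illustration) and x ~ N(1, 1), the exact mean and variance of h(x) are 2 and 6, but the first-order estimate gives 1 and 4.

```python
import random

def ekf_propagate(mu, var, h, dh):
    """EKF-style (first-order) propagation of a Gaussian N(mu, var) through h."""
    return h(mu), dh(mu) ** 2 * var

def monte_carlo_propagate(mu, var, h, n=100_000, seed=0):
    """Reference answer: sample the Gaussian, push every sample through h, measure the result."""
    rng = random.Random(seed)
    ys = [h(rng.gauss(mu, var ** 0.5)) for _ in range(n)]
    mean = sum(ys) / n
    return mean, sum((y - mean) ** 2 for y in ys) / n

def square(x):
    return x * x

def d_square(x):
    return 2.0 * x

print("EKF:        ", ekf_propagate(1.0, 1.0, square, d_square))   # (1.0, 4.0)
print("Monte Carlo:", monte_carlo_propagate(1.0, 1.0, square))     # close to (2.0, 6.0)
```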

What's happening to filter-based systems?

Filter-based systems are increasingly being replaced by graph-based (optimization-based) systems.
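A graph-based backend stores every pose as a node and every measurement (odometry or loop closure) as an edge, then solves for the poses that best satisfy all edges at once. The toy below is a 1D pose graph, so the problem is linear and one least-squares solve is enough; real pose graphs include rotations and are solved iteratively with libraries such as g2o, GTSAM or Ceres. The odometry bias and the loop-closure weight are made-up numbers.

```python
def optimize_pose_graph(num_poses, edges):
    """Least-squares 1D pose graph.
    edges: (i, j, measured_offset, weight) meaning x_j - x_i should equal measured_offset.
    Pose 0 is fixed at 0.0 to anchor the graph."""
    n = num_poses - 1                       # unknowns are x_1 .. x_{N-1}
    H = [[0.0] * n for _ in range(n)]       # normal-equation matrix  J^T W J
    b = [0.0] * n                           # right-hand side         J^T W z
    for i, j, z, w in edges:
        terms = [(i, -1.0), (j, 1.0)]       # Jacobian of x_j - x_i
        for a, sa in terms:
            if a == 0:
                continue                    # x_0 is fixed, not a variable
            b[a - 1] += w * sa * z
            for c, sc in terms:
                if c != 0:
                    H[a - 1][c - 1] += w * sa * sc
    # Gaussian elimination (H is symmetric positive definite once x_0 is fixed).
    for k in range(n):
        for r in range(k + 1, n):
            f = H[r][k] / H[k][k]
            for c in range(k, n):
                H[r][c] -= f * H[k][c]
            b[r] -= f * b[k]
    x = [0.0] * n
    for k in reversed(range(n)):
        x[k] = (b[k] - sum(H[k][c] * x[c] for c in range(k + 1, n))) / H[k][k]
    return [0.0] + x

# The robot really moves +1, +1, -1, -1 (back to the start); each odometry reading is biased by +0.1.
odometry = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, -0.9, 1.0), (3, 4, -0.9, 1.0)]
loop_closure = [(0, 4, 0.0, 100.0)]         # "pose 4 is the same place as pose 0", trusted strongly

print(optimize_pose_graph(5, odometry))                 # dead reckoning: ends at about 0.4
print(optimize_pose_graph(5, odometry + loop_closure))  # drift spread out: ends near 0.0
```

The loop-closure edge is what lets the optimizer distribute the accumulated drift back over the whole trajectory.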

What are the common methods of loop detection?

The common methods are map-to-map, image-to-image, and image-to-map matching.

Which one is the most accurate?

Image-to-image matching is the most accurate, since it matches the latest image against previous images, for example using Cummins' method.

What does bag of words refer to?

It refers to a technique that uses a visual vocabulary tree to turn the content of an image into a numerical vector, so that images can be compared efficiently.
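A minimal bag-of-words sketch: each descriptor is assigned to its nearest "visual word", the word counts form a normalized histogram, and two images are compared by the cosine similarity of their histograms. To keep it short, this assumes a flat, hand-made vocabulary of 2D words; real systems (for example DBoW2, used by ORB-SLAM) use a hierarchical vocabulary tree learned offline and add TF-IDF weighting.

```python
import math

def bow_vector(descriptors, vocabulary):
    """Quantize each descriptor to its nearest visual word; return the normalized word histogram."""
    hist = [0.0] * len(vocabulary)
    for d in descriptors:
        word = min(range(len(vocabulary)), key=lambda w: math.dist(d, vocabulary[w]))
        hist[word] += 1.0
    total = sum(hist) or 1.0
    return [h / total for h in hist]

def similarity(v1, v2):
    """Cosine similarity between two BoW vectors (1.0 = identical word distribution)."""
    dot = sum(a * b for a, b in zip(v1, v2))
    n1 = math.sqrt(sum(a * a for a in v1))
    n2 = math.sqrt(sum(b * b for b in v2))
    return dot / (n1 * n2) if n1 and n2 else 0.0

vocab = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]           # hypothetical visual words
img_a = [(0.5, 0.2), (9.5, 0.1), (9.8, -0.3)]             # current image
img_b = [(0.1, 0.4), (10.2, 0.2), (10.1, 0.5)]            # same place seen again
img_c = [(0.2, 9.7), (0.1, 10.3), (-0.2, 9.9)]            # a different place

va, vb, vc = (bow_vector(d, vocab) for d in (img_a, img_b, img_c))
print(similarity(va, vb), similarity(va, vc))
```

A high score between the current image and an old keyframe is what flags a loop-closure candidate, which is then verified geometrically.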

What are some common feature-point methods?

SIFT, SURF, and ORB are the most common feature-point methods.

What about ORB?

It uses FAST (Features from Accelerated Segment Test) as the feature detector and BRIEF (Binary Robust Independent Elementary Features) as the descriptor. A BRIEF descriptor is a binary string that describes the image patch around a feature. Because binary strings are cheap to compute and to compare, BRIEF speeds up real-time feature extraction and matching. ORB is widely used in V-SLAM because it is fast to compute.
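The BRIEF idea fits in a few lines: pick fixed random pixel pairs inside a patch once, set one bit per pair depending on which pixel is brighter, and compare descriptors by Hamming distance (XOR, then count the ones). This is a simplified sketch: the patch size, the 32 bits and the random pairs are assumptions, and real BRIEF smooths the patch first and uses 256 bits, while ORB additionally steers the pairs by the keypoint orientation.

```python
import random

def make_test_pairs(patch_size=9, n_bits=32, seed=42):
    """Random pixel-pair locations inside the patch (sampled once and reused for every keypoint)."""
    rng = random.Random(seed)
    def pick():
        return (rng.randrange(patch_size), rng.randrange(patch_size))
    return [(pick(), pick()) for _ in range(n_bits)]

def brief_descriptor(patch, pairs):
    """Pack the binary intensity tests I(p) < I(q) into one integer (the bit string)."""
    bits = 0
    for k, ((r1, c1), (r2, c2)) in enumerate(pairs):
        if patch[r1][c1] < patch[r2][c2]:
            bits |= 1 << k
    return bits

def hamming(a, b):
    """Number of differing bits between two descriptors."""
    return bin(a ^ b).count("1")

pairs = make_test_pairs()
patch = [[(r * 7 + c * 3) % 17 for c in range(9)] for r in range(9)]
brighter = [[v + 5 for v in row] for row in patch]   # same patch under uniformly brighter light
print(hamming(brief_descriptor(patch, pairs), brief_descriptor(brighter, pairs)))
```

Since only brightness comparisons are stored, a uniform change in illumination leaves the descriptor unchanged, and matching costs a single XOR per candidate.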

What is keyframe selection?

Keyframe selection acts as a filter that keeps useless or wrong information from entering the optimization and degrading the accuracy of localization and mapping. It also plays a significant role in reducing the load on the backend.
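A keyframe policy can be sketched as a few checks on each incoming frame: skip frames that come too soon (redundant), skip frames with weak tracking (possibly wrong pose), and accept a frame once the view has changed enough. The `Frame` fields and every threshold below are hypothetical values for illustration, not the rules of any particular SLAM system.

```python
from dataclasses import dataclass

@dataclass
class Frame:
    index: int            # position in the image stream
    tracked_points: int   # features successfully matched to the map
    translation_m: float  # estimated camera motion since the last keyframe (metres)

def is_new_keyframe(frame, last_keyframe, min_gap=5, min_tracked=50,
                    max_overlap=0.7, min_translation_m=0.3):
    """Hypothetical keyframe rule; every threshold here is an illustrative assumption."""
    if frame.index - last_keyframe.index < min_gap:
        return False      # too soon after the last keyframe: mostly redundant information
    if frame.tracked_points < min_tracked:
        return False      # tracking is weak, so the pose may be wrong: keep it out of the backend
    overlap = frame.tracked_points / max(last_keyframe.tracked_points, 1)
    # Insert a keyframe once the view has changed enough to add new information.
    return overlap < max_overlap or frame.translation_m > min_translation_m

last_kf = Frame(index=0, tracked_points=200, translation_m=0.0)
print(is_new_keyframe(Frame(10, 120, 0.1), last_kf))   # view changed: accepted
print(is_new_keyframe(Frame(10, 190, 0.1), last_kf))   # nearly the same view: rejected
```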